Introduction to Artificial Intelligence

Artificial Intelligence (AI) is a branch of computer science that aims to create machines capable of intelligent behavior, simulating human cognitive functions such as learning, reasoning, problem-solving, and understanding natural language. AI now touches numerous aspects of daily life and has reshaped industries including healthcare, finance, transportation, and entertainment.

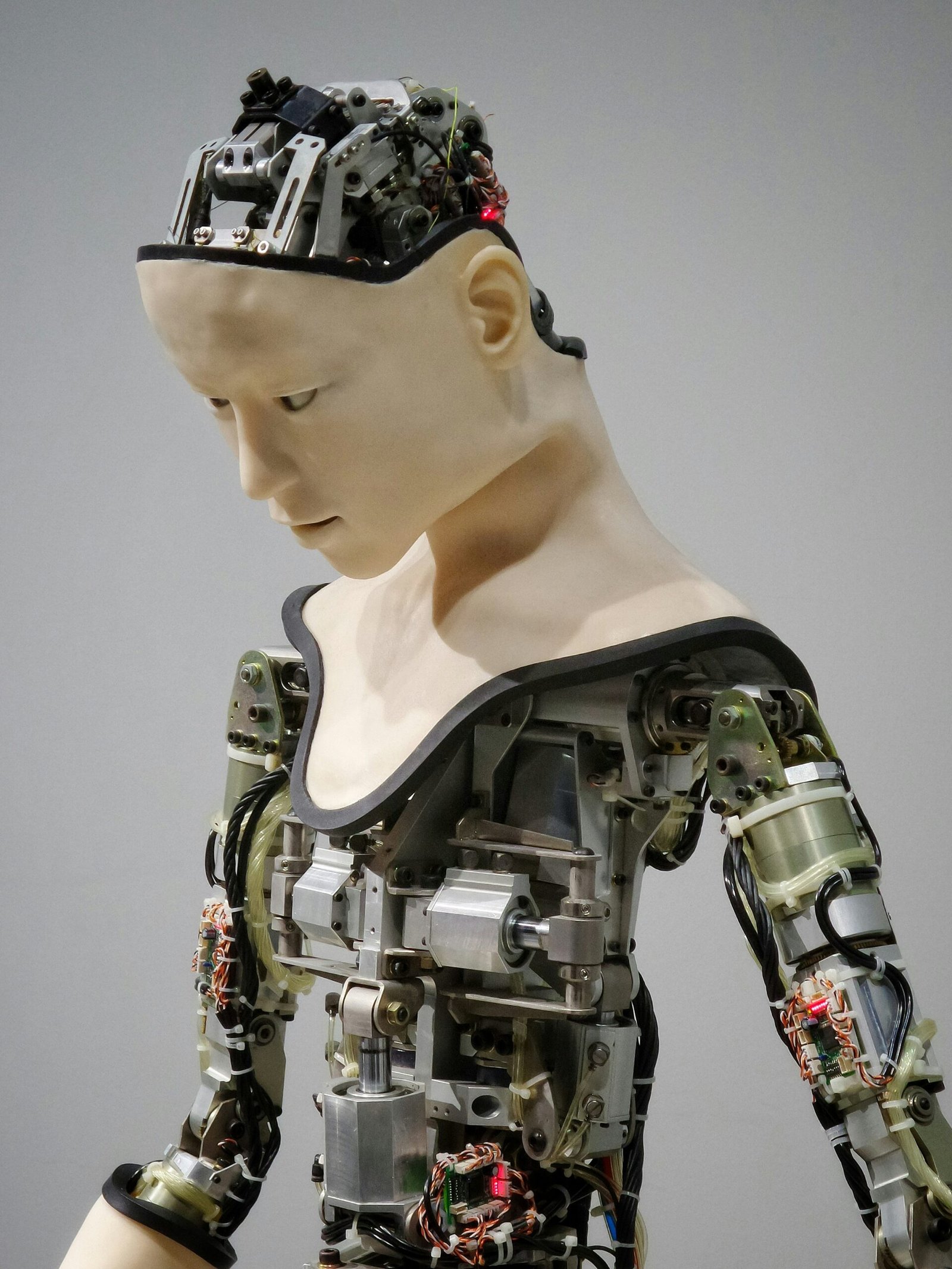

At its core, AI encompasses various domains, including but not limited to machine learning, natural language processing, robotics, and computer vision. Machine learning, a critical aspect of AI, focuses on the development of algorithms that allow computers to learn from and make predictions based on data. Natural language processing enables machines to understand and interpret human language, allowing for smoother interactions between humans and computers. Robotics integrates AI with physical machines to create systems that can perform tasks autonomously, while computer vision allows machines to interpret and process visual data, enhancing their ability to interact with the physical world.

The evolution of artificial intelligence has been marked by significant milestones, from early conceptualizations to advanced applications that increasingly mimic human actions and decisions. Understanding the historical context of AI offers valuable insights into its development, highlighting both technological advancements and the ethical considerations that come with the proliferation of intelligent systems. As AI continues to evolve, its impact on society will likely grow, prompting discussions around its implications for the future workforce, privacy, and human interactions. Thus, the study of AI is not merely a technical endeavor but also a crucial exploration of its intersection with human values and societal norms.

The Origins of AI: Early Concepts and Theories

The history of artificial intelligence (AI) is rooted in ideas that date back to ancient civilizations. The aspiration to create intelligent machines can be traced through myths and philosophies in which early thinkers imagined automated beings endowed with reasoning and cognitive abilities. From the automata described by Greek engineers to Aristotle’s work on logic and reasoning, the foundations of AI were unknowingly being laid.

One of the most significant developments in the 20th century was the formalization of concepts that would define modern AI. Alan Turing, a British mathematician and logician, is revered as a pioneer in this field. In his 1950 paper “Computing Machinery and Intelligence,” he proposed what is now known as the Turing Test (he called it the “imitation game”), a framework for judging whether a machine can exhibit intelligent behavior indistinguishable from that of a human. The test remains a reference point in debates about machine intelligence and has influenced how researchers think about evaluating AI systems.

The mid-20th century marked a critical phase in the evolution of artificial intelligence. The Dartmouth Conference in 1956 is often cited as the birth of AI as a formal discipline, where leading researchers convened to explore new ideas and approaches in machine intelligence. The theoretical groundwork was laid by several key figures, including John McCarthy, Marvin Minsky, and Herbert Simon, who proposed various models for replicating human-like problem-solving capabilities in machines.

These early concepts and theories not only sparked interest and enthusiasm within the scientific community but also set in motion the quest for creating machines that could think. This exploration has evolved remarkably, resulting in a profound impact on technology and society. The drive to understand and replicate intelligence has progressed through numerous models and methodologies, continuing to shape the path of AI development to this day.

The Birth of AI: The Dartmouth Conference

The Dartmouth Conference, held in the summer of 1956, is often considered the seminal event that gave birth to the field of artificial intelligence (AI). This gathering of researchers was organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon. The conference convened with the aspiration of exploring how machines could exhibit intelligent behavior, a theme that resonated throughout the proceedings and shaped the future trajectory of AI research.

During the conference, the participants discussed the potential of machines to perform tasks traditionally requiring human intelligence. They proposed various projects aimed at simulating aspects of human cognition, including problem-solving, learning, and decision-making. Notable topics included language processing and neural networks, which would later become foundational elements in the development of AI systems. Dartmouth College was a natural venue: McCarthy was a member of its mathematics faculty at the time.

Several key figures associated with the conference would later lead the charge in AI research. John McCarthy, who coined the term “artificial intelligence” in the proposal for the workshop, emphasized the need for rigorous, formal approaches to AI, while Marvin Minsky went on to become a leading figure in AI research at MIT. Claude Shannon’s influential work in information theory provided important groundwork for later advances. The collaborative atmosphere fostered by the conference encouraged an interdisciplinary approach, drawing on computer science, psychology, and mathematics.

The Dartmouth Conference had lasting implications for the field of AI, effectively laying the groundwork for future research initiatives and technological advancements. It catalyzed the establishment of AI as a formal academic discipline, leading to the formation of dedicated research labs and increased interest from government and industry. The ideas and projects that originated at the conference fueled decades of inquiry and exploration, shaping the path of artificial intelligence into the multifaceted field it is today.

The Rise and Fall of Early AI Research

The progression of artificial intelligence (AI) can be divided into several phases. The early years saw a surge of interest and optimism that eventually gave way to downturns commonly referred to as “AI winters.” This term describes periods during which enthusiasm and funding for AI research dropped sharply, primarily because of the gap between expectations and actual technological achievements. In the 1950s and 1960s, building on Alan Turing’s earlier ideas, pioneering figures such as John McCarthy and Marvin Minsky fostered an environment ripe for exploration, leading to milestones such as early neural networks like the perceptron and symbol manipulation programs like the Logic Theorist.

This era was marked by ambitious goals, including the aspiration to create machines that could perform tasks considered uniquely human. However, as projects progressed, researchers ran into technical limitations and a lack of computational power, which kept them from delivering the advances they had promised. The excitement of 1960s AI research began to fade as funding agencies reevaluated their priorities, leading to the first major AI winter in the 1970s. The lack of tangible results disillusioned stakeholders who had anticipated rapid breakthroughs.

Revival of AI: The Machine Learning Era

The revival of artificial intelligence (AI) in the 1990s and 2000s marked a significant turning point in the field. This resurgence was driven primarily by advancements in machine learning techniques, which enabled computers to derive insights from large datasets, thus mimicking certain aspects of human cognition. One notable aspect of this era was the substantial increase in computational power, allowing more complex algorithms to be executed within feasible timeframes. The capability to process vast amounts of data opened new avenues for AI applications, fundamentally altering how machines interacted with information.

During this period, several algorithms gained prominence and strongly influenced the development of machine learning. Neural networks, in particular, saw a resurgence, using multilayer structures loosely inspired by how biological neurons function. These networks were initially limited by the computational resources available, yet as technology progressed, they became capable of tackling complex problems such as image and speech recognition. Additionally, support vector machines emerged as a powerful supervised learning method for classification tasks, supporting decision-making across various industries.
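
To make the classification idea concrete, here is a minimal sketch of a linear support vector machine in plain Python. It is an illustration under simplifying assumptions, not a description of how SVMs were implemented at the time: it trains by stochastic subgradient descent on the regularized hinge loss instead of solving the usual quadratic program, it uses no kernel, and the function names, hyperparameters, and toy data points are all invented for this example.

```python
def train_linear_svm(points, labels, lam=0.01, lr=0.1, epochs=200):
    """Fit weights w and bias b for a linear SVM.

    Uses stochastic subgradient descent on the L2-regularized hinge loss.
    Labels must be +1 or -1. All hyperparameters are illustrative defaults.
    """
    w = [0.0] * len(points[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(points, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            # The regularization term shrinks the weights on every step.
            w = [wi - lr * lam * wi for wi in w]
            # Only points inside the margin (or misclassified) push the boundary.
            if margin < 1:
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b


def classify(w, b, x):
    """Return +1 or -1 depending on which side of the boundary x falls."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


# Hypothetical, linearly separable toy data: two clusters in the plane.
points = [[2, 2], [3, 3], [3, 1], [-2, -2], [-3, -1], [-1, -3]]
labels = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(points, labels)
print(classify(w, b, [4, 4]), classify(w, b, [-4, -4]))
```

The key design idea is the margin: points that are already classified with room to spare leave the boundary alone, so the final boundary is determined by the hardest examples near it.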

Another key player in this evolution was the decision tree algorithm. This method provided a straightforward and intuitive approach to modeling decisions, making it accessible for practitioners in many fields. By representing potential outcomes as branches of a tree, it allowed for easy visualization of decision paths, making it particularly useful in environments where interpretability was necessary. The combination of these algorithms fostered a new understanding of data, prompting further research into hybrid models and ensemble techniques, which would later emerge as cornerstones of modern machine learning.
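
The branching idea can be sketched in a few lines of Python. The example below is a simplified illustration rather than any specific historical algorithm: it chooses splits by Gini impurity (one common criterion), and the loan data, feature meanings, and function names are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels (0.0 means all one class)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels):
    """Return the (feature_index, threshold) that most reduces impurity, or None."""
    best, best_score = None, gini(labels)
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def build_tree(rows, labels, depth=0, max_depth=3):
    """Recursively build a tree; a leaf is simply the majority label."""
    split = best_split(rows, labels) if depth < max_depth else None
    if split is None:
        return Counter(labels).most_common(1)[0][0]
    f, t = split
    left = [(r, y) for r, y in zip(rows, labels) if r[f] <= t]
    right = [(r, y) for r, y in zip(rows, labels) if r[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([r for r, _ in left], [y for _, y in left], depth + 1, max_depth),
        "right": build_tree([r for r, _ in right], [y for _, y in right], depth + 1, max_depth),
    }


def predict(tree, row):
    """Follow branches from the root until a leaf label is reached."""
    while isinstance(tree, dict):
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree


# Hypothetical loan data: [income in thousands, years employed].
rows = [[20, 1], [30, 5], [40, 2], [50, 1], [60, 7], [80, 3]]
labels = ["decline", "decline", "decline", "approve", "approve", "approve"]

tree = build_tree(rows, labels)
print(tree)                     # a single split on income at 40
print(predict(tree, [45, 2]))   # approve
```

Because the learned model is just nested questions of the form "is feature f at most t?", it can be printed and read directly, which is the interpretability advantage described above.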

As the demand for AI capabilities grew, the synergy between algorithmic development, increased computing resources, and the availability of large datasets catalyzed an environment ripe for innovation. This period, therefore, not only revitalized AI but also set the stage for the technological advancements that followed in the subsequent years.

AI in the 21st Century: Breakthroughs and Innovations

The 21st century has witnessed an unprecedented transformation in artificial intelligence, marked by several groundbreaking innovations that have significantly advanced the field. The advent of deep learning, a subset of machine learning, has revolutionized how AI interprets and processes vast amounts of data. By utilizing neural networks with many layers, deep learning algorithms can learn hierarchical representations of data, enabling them to handle complex tasks such as image and speech recognition with remarkable accuracy.
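
A tiny example helps show why stacking layers matters. The sketch below is a two-layer network that computes XOR, a function that no single linear threshold unit can represent. It is only an illustration of layered composition: the weights are set by hand rather than learned, the step activation is a simplification, and all names are invented for this example. Real deep networks have many more layers and learn their weights from data.

```python
def step(z):
    """Threshold activation: fire (1) when the weighted input is positive."""
    return 1 if z > 0 else 0


def layer(inputs, weights, biases):
    """Apply one layer: each unit takes a weighted sum of inputs plus a bias."""
    return [
        step(sum(w * x for w, x in zip(unit_weights, inputs)) + b)
        for unit_weights, b in zip(weights, biases)
    ]


# Hand-set weights (assumptions for illustration, not learned values).
HIDDEN_W = [[1, 1], [-1, -1]]   # unit 0 computes OR, unit 1 computes NAND
HIDDEN_B = [-0.5, 1.5]
OUTPUT_W = [[1, 1]]             # output unit computes AND of the hidden features
OUTPUT_B = [-1.5]


def xor_net(a, b):
    """XOR built as AND(OR(a, b), NAND(a, b)) across two layers."""
    hidden = layer([a, b], HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_net(a, b))
```

The hidden layer turns the raw inputs into two intermediate features, and the output layer combines those features. Deep learning applies the same principle at scale, with each layer building on the representations produced by the one before it.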

Natural language processing (NLP) has also experienced considerable refinement, ushering in new capabilities for machines to understand and generate human language. Developments in NLP have empowered various applications, ranging from chatbots and virtual assistants to sophisticated translation software, thereby making human-computer interaction more seamless than ever. This progress has not only enhanced user experience but has also opened avenues for more accessible information retrieval and communication across language barriers.

Another noteworthy achievement is the use of AI in strategic games, exemplified by historic victories in chess and Go. IBM’s Deep Blue had already defeated world chess champion Garry Kasparov in 1997, and in 2016 Google DeepMind’s AlphaGo made headlines by defeating Lee Sedol, one of the world’s top professional Go players, in a game renowned for its complexity and strategic depth. This achievement demonstrated AI’s capacity not just to process information and execute calculations, but also to make strong strategic decisions in a domain where brute-force search alone is impractical. Such milestones underscore the proficiency AI has gained in areas previously thought to be the exclusive domain of human intellect.

Apart from these breakthroughs, AI technologies have become ubiquitous in everyday applications. From personalized recommendations on streaming platforms to intelligent traffic management systems, AI is woven into the fabric of modern life. These advancements showcase the potential of artificial intelligence to transform industries and improve efficiency across various sectors, ultimately indicating a promising trajectory for future developments in the field.

AI Today: Applications and Impact

Artificial Intelligence (AI) has become an integral component of numerous industries, profoundly influencing how businesses operate and interact with consumers. In healthcare, AI is revolutionizing patient care through accurate diagnostics and personalized treatment plans. Machine learning algorithms analyze vast datasets, identifying patterns that can predict health issues before they become critical. For instance, AI-driven tools enable real-time monitoring of patients, ensuring prompt interventions based on predictive analytics.

In the finance sector, AI automates trading, detects fraud, and facilitates customer service through virtual assistants. Banks and financial institutions leverage AI algorithms to analyze market trends and client behaviors, enhancing decision-making and risk management. The efficiency of AI applications results in cost reduction and improved customer satisfaction; however, this evolution poses challenges related to job displacement and the ethical management of sensitive financial data.

Transportation is another domain significantly impacted by advancements in AI. The emergence of autonomous vehicles marks a profound shift in how we conceive mobility. Self-driving cars utilize AI technologies to navigate and respond to road conditions, aiming to reduce accidents and optimize traffic flow. While promising, these innovations raise ethical questions regarding liability in case of accidents and the societal impacts of widespread automation in transport systems.

The societal implications of AI extend beyond convenience to encompass ethical considerations. Issues surrounding data privacy, algorithmic bias, and accountability necessitate careful deliberation. While AI provides substantial benefits, such as efficiency and scalability, it also challenges existing norms and frameworks. As industries continue to adopt AI technologies, the dialogue surrounding their implications must evolve, ensuring that advancements align with societal values and ethical standards. The balance between innovation and responsibility will play a crucial role in shaping the future of AI and its impact on our daily lives.

Future of AI: Trends and Predictions

The future of artificial intelligence (AI) is poised to be shaped by several significant trends that reflect both advancements in technology and the evolving landscape of societal needs. One of the primary trends is the rise of collaborative AI, where machines and humans work together in increasingly harmonious ways. This paradigm shift enhances the decision-making process, employing AI algorithms to provide insights while leaving the final judgment to human experts. Such collaboration is expected to foster innovation across various sectors, including healthcare, finance, and education, leading to more efficient and personalized services.

Additionally, AI governance is gaining momentum as the need for regulation becomes ever clearer. Discussions surrounding ethical frameworks and policies are crucial as the capabilities of AI systems grow. Policymakers are focusing on how to capture the benefits of AI while addressing potential risks, including biases inherent in algorithms and the lawful use of data. Societal oversight will be instrumental in shaping a future where artificial intelligence contributes positively to public welfare, ensuring that technology is aligned with human values.

The integration of AI into everyday life is another trajectory that merits attention. From smart homes to autonomous vehicles and enhanced virtual assistants, AI is becoming an integral part of daily routines. However, this widespread adoption comes with challenges, particularly regarding privacy. As more personal data is collected, concerns over security and user rights grow. Striking a balance between utility and privacy will be crucial for the smooth integration of AI technologies into societal infrastructure.

Moreover, the prospect of job displacement due to automation has intensified discussions about the future workforce. While AI is expected to create new roles, it may also render certain jobs obsolete. Addressing this challenge will require reskilling and upskilling initiatives to prepare the workforce for a future alongside AI.

Conclusion: The Journey Ahead

The historical journey of artificial intelligence (AI) is a testament to human ingenuity and perseverance. From its inception in the mid-20th century, the evolution of AI has been marked by groundbreaking developments and significant milestones. Early explorations laid the foundation for contemporary AI applications, which now permeate various industries, influencing decision-making processes, enhancing productivity, and even transforming everyday tasks. As we delve into the complexities of intelligent systems, it is imperative to recognize the milestones achieved, as well as the challenges yet to be overcome.

In recent years, AI has showcased remarkable advancements, particularly in areas such as machine learning and natural language processing. However, with these advancements come critical ethical considerations and responsibilities. The rapid deployment of AI technologies raises questions regarding bias, privacy, and accountability. Stakeholders must remain vigilant, ensuring that the evolution of artificial intelligence aligns with societal values and fosters inclusivity. The journey ahead requires a commitment to responsible innovation, where the benefits of AI are accessible to all while mitigating its potential risks.

As we stand on the brink of further advancements, individuals are encouraged to stay informed about AI developments and actively participate in discussions surrounding its impact. Understanding the implications of artificial intelligence on daily life is essential, not only for professionals in the field but for society at large. By fostering an informed public discourse, we can collectively navigate the challenges posed by the ongoing evolution of AI. It is our shared responsibility to advocate for ethical standards and policies that promote the responsible utilization of AI technologies, ensuring a balanced approach to innovation.